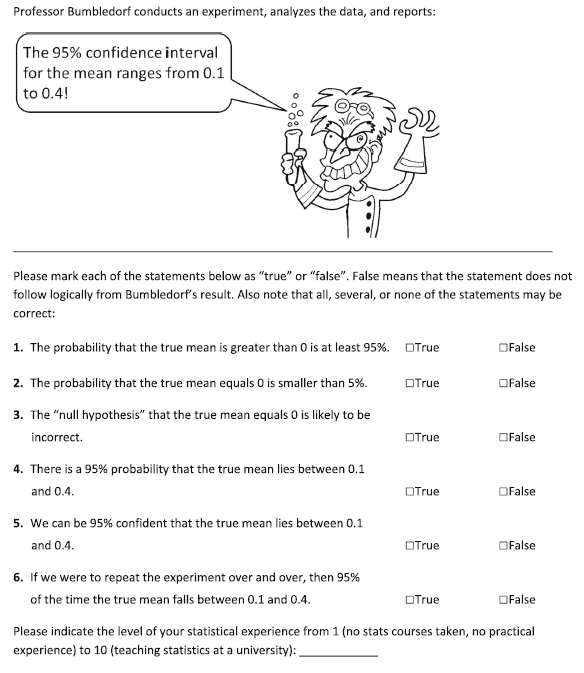

In a recent paper Hoekstra, Morey, Rouder, & Wagenmakers argued that confidence intervals are just as prone to misinterpretation as tradiational p-values (for a nice summary, see this blog post). They draw this conclusion based on responses to six questions from 442 bachelor students, 34 master students, and 120 researchers (PhD students and faculty). The six questions were of True / False format and are shown here (this is taken directly from their Appendix, please don’t sue me; if I am breaking the law I will remove this without hesitation):

Hoekstra et al. note that all six statements are false and therefore the correct response to mark each as False. [1] The results were quite disturbing. The average number of statements marked True, across all three groups, was 3.51 (58.5%). Particularly disturbing is the fact that statement #3 was endorsed by 73%, 68%, and 86% of bachelor students, master students, and researchers respectively. Such a finding demonstrates that people often use confidence intervals simply to revert back to NHST (i.e., if the CI does not contain zero, reject the null).

However, it was questions #4 and #5 that caught my attention when reading this study. The reason they caught my attention is because my understanding of confidence intervals told me they are correct. However, the correct interpretation of a confidence interval, according to Hoekstra et al., is “If we were to repeat the experiment over and over, then 95% of the time the confidence intervals contain the true mean.” Now, if you are like me, you might be wondering, how is that different from a 95% probability that the true mean lies between the interval? Despite the risk of looking ignorant, I asked that very question on Twitter:

Alexander Etz (@AlexanderEtz) provided an excellent answer to my question. His post is rather short, but I’ll summarize it here anyway: from a Frequentist framework (under which CIs fall), one cannot assign a probability to a single event, or in this case, a single CI. That is, the CI either contains μ (p = 1) or it does not (p = 0), from a Frequentist perspective.

Despite Alexander’s clear (and correct) explanation, I still reject it. I reject it on the grounds that it is practically useful to think of a single CI as having a 95% chance of containing μ. I’m not alone here. Geoff Cumming also thinks so. In his book (why haven’t you bought this book yet?) on p. 78 he provides two interpretations for confidence intervals that match my perspective. The first interpretation is “One from the Dance of CIs.” This interpretation fits precisely with Hoekstra et al.’s definition. If we repeated the experiment indefinitely we would approach an infinite number of CIs and 95% of those would contain μ. The second interpretation (“Interpret our Interval”) says the following:

It’s tempting to say that the probability is .95 that μ lies in our 95% CI. Some scholars permit such statements, while others regard them as wrong, misleading, and wicked. The trouble is that mention of probability suggests μ is a variable, rather than having a fixed value that we don’t know. Our interval either does or does not include μ, and so in a sense the probability is either 1 or 0. I believe it’s best to avoid the term “probability,” to discourage any misconception that μ is a variable. However, in my view it’s acceptable to say, “We are 95% confidence that our interval includes μ,” provided that we keep in the back of our minds that we’re referring to 95% of the intervals in the dance including μ, and 5% (the red ones) missing μ.

So in Cumming’s view, question #4 would still be False (because it misleads one to thinking that μ is a variable), but #5 would be True. Regardless, it seems clear that there is some debate about whether #4 and #5 are True or False. My personal belief is that it is okay to mark them both True. I’ve built a simple R example to demonstrate why.

# First, create 1000 datasets of N=100 each from a normal distribution.

set.seed(5)

sims <- 1000

datasets <- list()

for(i in 1:sims) {

datasets[[i]] <- rnorm(100)

}

# Now get the 95% confidence interval for each dataset.

out <- matrix(unlist(lapply(datasets, function(x) t.test(x)$conf.int)), ncol=2, byrow=T)

colnames(out) <- c("LL", "UL")

# Count the number of confidence intervals containing Mu

res <- ifelse(out[,1] <= 0 & out[,2] >= 0, 1, 0)

sum(res) / sims

# Thus, ~95% of our CIs contain Mu

This code creates 1000 datasets of N=100 by randomly drawing scores from a normal distribution with μ = 0 and σ = 1. It then computes a 95% confidence interval for the mean for each dataset. Lastly, it counts how many of those contain μ (0). In this case, it is just about 95%.[2] This is precisely the definition of a confidence interval provided by Hoekstra et al. If we repeat an experiment many times, 95% of our confidence intervals should contain μ. However, if we were just given one of those confidence intervals (say, at random) there would also be a 95% chance it contains μ. So if we think of our study, and its confidence interval, as one of many possible studies and intervals, we can be 95% confident that this particular interval contains the population value.

Moreover, this notion can be extended beyond a single experiment. That is, rather than thinking about repeating the same experiment many times, we can think of all of the different experiments (on different topics with different μs) we conduct and note that 95% of them will contain μ within the confidence interval, but 5% will not. Therefore, while I (think) I understand and appreciate why Hoekstra et al. consider the answers to #4 and #5 to be False, I disagree. I think that they are practically useful interpretations of a CI. If it violates all that is statistically holy and sacred, then damn me to statistical hell.

Despite this conclusion, I do not mean to undermine the research by Hoekstra et al. Indeed, my point has little bearing on the overall conclusion of their paper. Even if questions #4 and #5 were removed, the results are still incredibly disturbing and suggest that we need serious revisions to our statistical training.

[1] The last sentence of the instructions makes it clear that it is possible that all True and all False are possibilities. How many people actually believed that instruction is another question.

[2] Just for fun, I also calculated the proportion of times a given confidence interval contains the sample mean from a replication. The code you can run is below, but the answer is about 84.4%, which is close to Cummings’ (p. 128) CI Interpretation 6 “Prediction Interval for a Replication Mean” of 83%.

# Now get the sample Means Ms <- unlist(lapply(datasets, mean)) # For each confidence interval, determine how many other sample means it captured reptest <- sapply(Ms, function(x) ifelse(out[,1] <= x & out[,2] >= x, 1, 0)) # Remove the diagonal to avoid double-counting diag(reptest) <- NA # Now summarize it: mean(colMeans(reptest, na.rm=T)) # So ~ 84.4% chance of a replication falling within the 95% CI